April 17, 2025

Mastering LLMs: Essential Prompting Techniques for AEC Professionals

by VIKTOR Team

How do LLMs work?

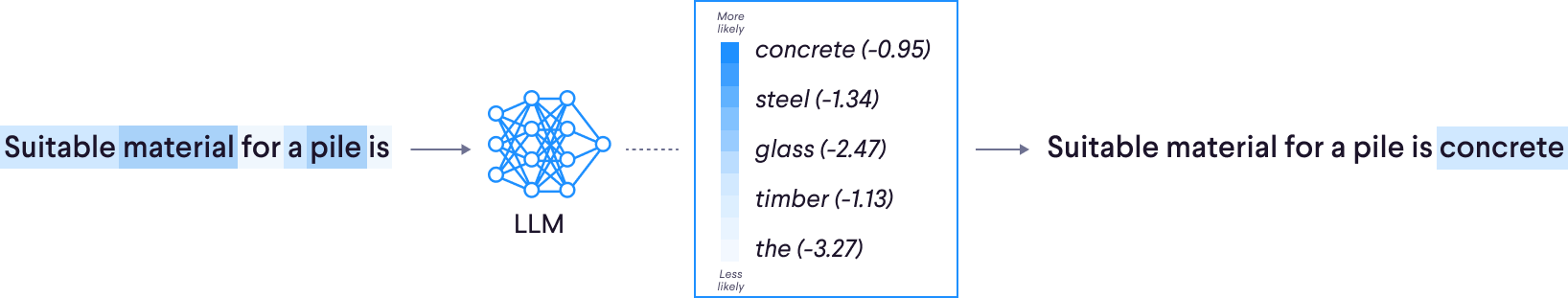

LLMs use neural networks to predict the most likely next word (more precisely, the next token) in a sequence, based on context, patterns, and semantic relationships. Trained on extensive datasets, they can produce human-like text and help solve intricate problems. These models build on techniques such as the attention mechanism and the Transformer architecture, which let them weigh how the words in a passage relate to one another.
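To make "predicting the most likely next word" concrete, here is a toy sketch in Python. The scores are invented for illustration; a real model computes them with a neural network over a vocabulary of many thousands of tokens, and the helper name `softmax_probabilities` is our own.

```python
import math

def softmax_probabilities(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores.values())  # subtract the peak for numerical stability
    exps = {word: math.exp(score - peak) for word, score in scores.items()}
    total = sum(exps.values())
    return {word: value / total for word, value in exps.items()}

# Made-up scores for the word that follows "The beam is made of ..."
scores = {"steel": 3.0, "concrete": 2.5, "timber": 1.5, "banana": -2.0}

probabilities = softmax_probabilities(scores)
next_word = max(probabilities, key=probabilities.get)
print(next_word)  # prints "steel"
```

In practice, models usually sample from these probabilities rather than always taking the top word, which is one reason the same prompt can give different answers.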

The Rise of AI in AEC

AI is increasingly being integrated into the AEC sector, with LLMs leading the charge in areas like architectural design, structural engineering, and construction project management. From supporting complex structural calculations to improving designs for energy efficiency, AI tools are being incorporated into many AEC software platforms to boost productivity and refine decision-making.

To make full use of LLMs, AEC professionals must become proficient prompters as well. Prompting is quickly becoming a key skill in an industry that is increasingly AI-driven. As this technology keeps evolving, it will continue to change the way we work, redefining traditional AEC workflows and paving the way for innovation in the built environment worldwide.

How to get started today

One of the amazing things about LLMs is that they are very beginner-friendly, and the possibilities are endless. You will master their capabilities mostly through trial and error, finding out what works for you.

To get you started, here are four basic LLM applications that will immediately help you save time.

- Text generation: Consider how much text you write per day: a few emails, some project documentation, a memo here and there. We write thousands of words per week, and every sentence takes effort. Given the right context, an LLM can take away some of that effort and, for example, draft the abstract for your report.

- Code generation: Imagine you're an engineer struggling with coding. Using an LLM is like having a tech-savvy colleague sitting next to you whom you can ask to help write a script at any time of the day. The LLM may not always generate perfectly working code, but it can often write most of it, including detailed descriptions. What remains for you is some debugging and checking the logic. The days of struggling to write code line by line are over!

- Summarizing: An LLM is like having a super-fast reader with perfect memory on your team. It can quickly process long documents or reports that would take you hours to read. Once it's done, you can ask it anything about the content - from project delays to budget issues. The LLM can instantly pick out this information and even tell you which page to check for more details.

- Ideation: An LLM can also be your creative sidekick. Stuck on a design? Describe your problem, and it'll suggest various solutions. It can draw from different styles and techniques, offering fresh perspectives you might not have considered. It's like brainstorming with a team of experts, available whenever you need inspiration. The true creative spark still comes from human expertise, but having someone to spar with can help a lot!

Prompting essentials

So now that we have some ideas on what you can use LLMs for, let’s dive into some ways to get started effectively.

Following these three principles ensures that the LLM understands your problem, the task, and the solution it needs to deliver, much as you would need that information to complete the task yourself.

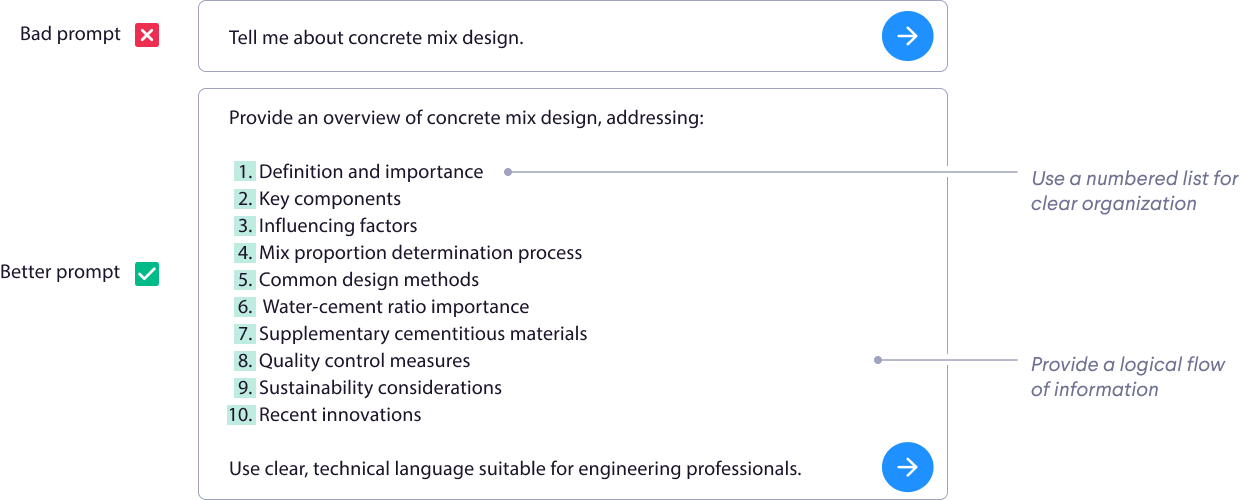

- Structured input and output: Stating the expected output, whether this is an image, text, or code, removes the need for the model to improvise. Similarly, if you know the input is text, a series of numbers, or even a file, tell the LLM, just as you would want to know about a file a colleague is sending you. You can also instruct an LLM to give information on a certain topic. In the example below, you see how you can list all the information you want, creating guidelines for the LLM to follow as it generates the output.

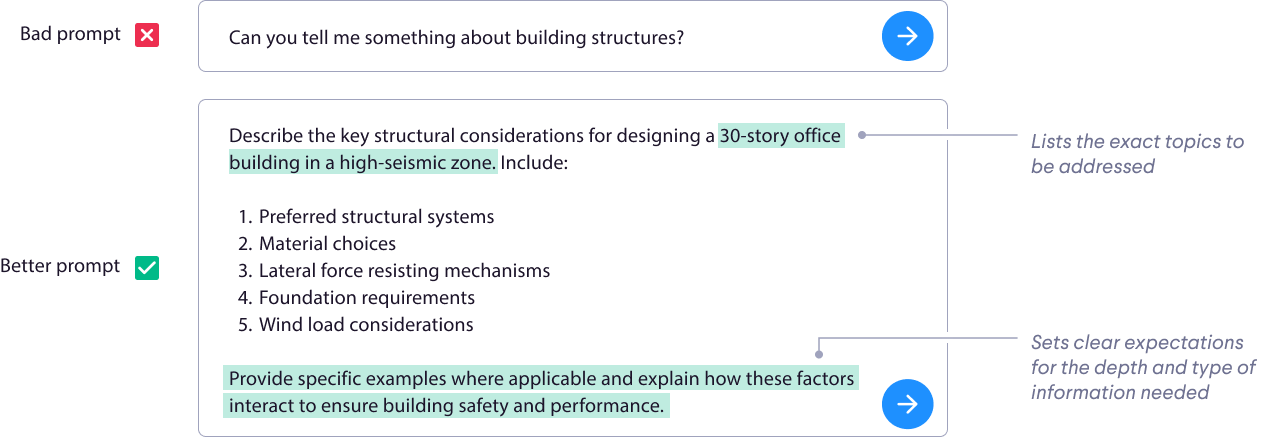

- Clarity and specificity: LLMs rely heavily on the context you provide. A clear description of the problem helps the LLM identify the limits and possibilities. It is also important to realize that an LLM can follow a step-by-step plan very well. Once you have structured the inputs and outputs, adding clear instructions is easy.

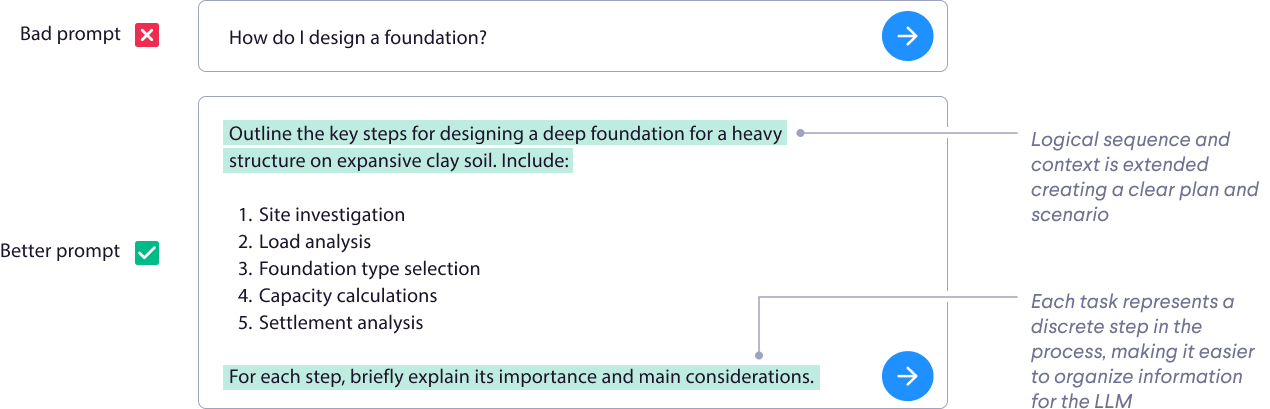

- Task decomposition: If you split a complex task into smaller, bite-sized chunks, the LLM can tackle the sub-problems much better than if it tries to solve everything at once. With code generation especially, this lets you fix each error as you encounter it while developing the script together.
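The three principles above can be combined in a small prompt template. The sketch below is one possible way to do it, not a prescribed method: `build_prompt` and its parameters are hypothetical names, and the beam-deflection task is an invented example.

```python
def build_prompt(task, input_description, output_format, steps):
    """Assemble a prompt that states the input, the expected output, and the sub-tasks."""
    lines = [
        f"Task: {task}",
        f"Input: {input_description}",
        f"Expected output: {output_format}",
        "Work through these steps in order:",
    ]
    # Number the sub-tasks so the model can follow them one by one.
    lines += [f"{number}. {step}" for number, step in enumerate(steps, start=1)]
    return "\n".join(lines)

prompt = build_prompt(
    task="Write a Python script that checks beam deflection against a limit.",
    input_description="A CSV file with the columns span_m, load_kN_per_m, and EI_kNm2.",
    output_format="A single Python file, plus a short explanation of each function.",
    steps=[
        "Read the CSV file into a list of beams.",
        "Compute the midspan deflection for each beam.",
        "Flag every beam whose deflection exceeds span/250.",
    ],
)
print(prompt)
```

Because the steps are listed separately, you can also send them one at a time and check each intermediate result before moving on.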

Prompting best practices

So now that you know how to write good prompts for your tasks, let’s cover four best practices to help you achieve your goals fast, using as few prompts as possible.

- Give the model room to think: Like a human, an LLM does better when it has room to consider the best option for a solution. Unlike a human, however, an LLM will not complain about a small set of options unless you specifically tell it to, so always check whether you are over-constraining it. Also consider asking for alternatives: if the prompt leaves no room for variation, you will not get a significantly different result. In the example below, asking for multiple options lets the LLM consider and reason through different design options.

Example: "I'm designing a footbridge across a small river with limited space for foundations. List different structural systems that could work and compare them in terms of material efficiency, cost, and constructability."

- Specify formats: Being explicit about formats, especially when generating code, gives the LLM a clear understanding of what input to expect and what to output. This may seem to contradict the previous best practice, but the constraint is deliberate: the input usually comes from elsewhere, and the output may need to be processed by another person or program, each of which may need a different format. In the following example, specifying the output format and the keys of the data structure helps the LLM shape its output:

"Generate a Python function that calculates the axial stress in a structural member. Return the result as a JSON object with keys: 'force_N', 'area_mm2', and 'stress_MPa'."
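For illustration, the kind of function this prompt asks for might look like the sketch below. It is not actual model output: the function name `axial_stress` and the input check are our assumptions, while the three keys come from the prompt. Note that a force in N divided by an area in mm² gives a stress in MPa directly.

```python
import json

def axial_stress(force_N, area_mm2):
    """Return the axial stress as a JSON string (N / mm2 = MPa)."""
    if area_mm2 <= 0:
        raise ValueError("area_mm2 must be positive")
    result = {
        "force_N": force_N,
        "area_mm2": area_mm2,
        "stress_MPa": force_N / area_mm2,
    }
    return json.dumps(result)

print(axial_stress(150000, 2500))
# prints {"force_N": 150000, "area_mm2": 2500, "stress_MPa": 60.0}
```

Because the keys are fixed in the prompt, another program can read the result without guessing what the fields are called.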

- Effectively using delimiters: In the context of LLMs, delimiters are special characters or sequences used to separate different parts of a prompt. You can use them to create sections in your prompt, indicate importance, and give the prompt a clear structure. This improves clarity and consistency, and makes it easier for the LLM to parse the input. Here is a table with some commonly used delimiters for LLMs.

| Delimiter | Example | Typical Use |
|---|---|---|
| Triple quotes """ | """text""" | Enclosing large blocks of text or code |
| Triple backticks ``` | ```text``` | Highlighting code snippets or structured content |
| Angle brackets < > | <instruction> | Marking specific instructions or roles |
| Square brackets [ ] | [context] | Providing additional context or placeholders |
| Curly braces { } | {variable} | Indicating variables or replaceable elements |
| Dashes --- | ---Section--- | Separating different sections of a prompt |
| Equals signs === | ===Category=== | Denoting categories or major divisions |
| Colons : | Role: Assistant | Specifying roles or attributes |
| Pipe symbols \| | Option A \| Option B | Separating options or alternatives |
| Asterisks * | *important* | Emphasizing key words or phrases |

- Set goals & create a scenario: Start your prompt by clearly stating what you want to achieve, and be specific by naming a measurable outcome. Creating a scenario then lets you set the stage with relevant background information (context) and define constraints. Combined with delimiters, this lets you label the goal, the scenario, and the constraints separately, which is very helpful to the LLM!

Example:

[GOAL]: Develop a slope stabilization plan to prevent landslides on a 45-degree highway cut with a factor of safety of at least 1.5.

[SCENARIO]: You're consulting on a new highway project through mountainous terrain. The area experiences heavy rainfall and has a history of landslides.

[CONSTRAINTS]: The stabilization method must be environmentally friendly and allow for native vegetation growth. The project timeline is 6 months.
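If you reuse this layout often, the delimited sections can be assembled in a few lines of Python. This is a minimal sketch; `delimited_prompt` is a hypothetical helper of our own, not part of any library.

```python
def delimited_prompt(sections):
    """Join labelled sections, marking each label with square-bracket delimiters."""
    return "\n\n".join(
        f"[{label.upper()}]: {text}" for label, text in sections.items()
    )

prompt = delimited_prompt({
    "goal": "Develop a slope stabilization plan to prevent landslides on a "
            "45-degree highway cut with a factor of safety of at least 1.5.",
    "scenario": "You're consulting on a new highway project through mountainous "
                "terrain. The area experiences heavy rainfall and has a history "
                "of landslides.",
    "constraints": "The stabilization method must be environmentally friendly and "
                   "allow for native vegetation growth. The project timeline is "
                   "6 months.",
})
print(prompt)
```

Keeping the sections in a dictionary makes it easy to change one part, such as the constraints, without retyping the rest of the prompt.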

Conclusion

Large Language Models are transforming the AEC industry, offering powerful tools for text generation, coding, analysis, and creative problem-solving. By mastering prompting techniques, engineers can extend their skillset with LLMs and immediately improve their productivity and decision-making. An engineer who is not a proficient coder can now produce high-quality code, provided they debug it and check the logic.

The future of AEC lies in effectively combining human expertise with AI capabilities. Don't wait – start exploring LLMs today to stay ahead in this rapidly evolving field. Embracing these tools now will position you at the forefront of innovation in the built environment, leading to more efficient, creative, and sustainable projects.

Want to learn how you can combine the power of AI with VIKTOR.AI? Try it now!